Developing distributed algorithms is not particularly easy. Doing it in the kernel instead of in user space is even harder. Chasing low probability race conditions which cause data corruptions is just madness.

Our solution to this problem is extensive testing using user space simulators. Here are the basic steps we are using for this:

Data Services development and testing:

- Data services components are black boxed and incorporated in the client’s kernel module. This module is loaded into a kernel simulator.

- A kernel simulator is a user space application that simulates a Linux kernel. There are off-the-shelf solutions to run a Linux kernel in user space but they have many drawbacks: they are large, slow, and do not allow enough control by unitests. At Excelero, we wrote a basic kernel simulator from scratch that only implements the methods that the module accesses: memory management, work queues, virtual CPUs, timers, linked lists, block API, etc. The kernel simulator is highly configurable by the uni-testing environment, allowing it to stop specific CPUs, reschedule jobs in different fashion to reproduce race conditions, track memory allocations, force it to issue specific IO patterns and much more.

- Data services now run in the user space but they still need all other components to function properly. These components are developed by other teams. To solve this, we use a simulator for each component. For example, we simulate NVMe disks using ram-disks. We simulate network messages, the topology manager, databases that hold the configuration, applications that issue IOs, NVMe–errors, etc.

- All the simulators described above have a dual API. In other words, they implement exactly the set of functions and behaviors of the real components, but they also implement an API towards the uni-test environment. For example, the disk simulator supports a regular API of the NVMe-driver, but also has additional functions like insert_bad_sector(), inject_random_failure() and disconnect_from_client(). The Toma simulator implements exactly the correct messaging protocol with the client but also has functions like: inject_false_message(), ignore_next_clients_message(), force_reboot()

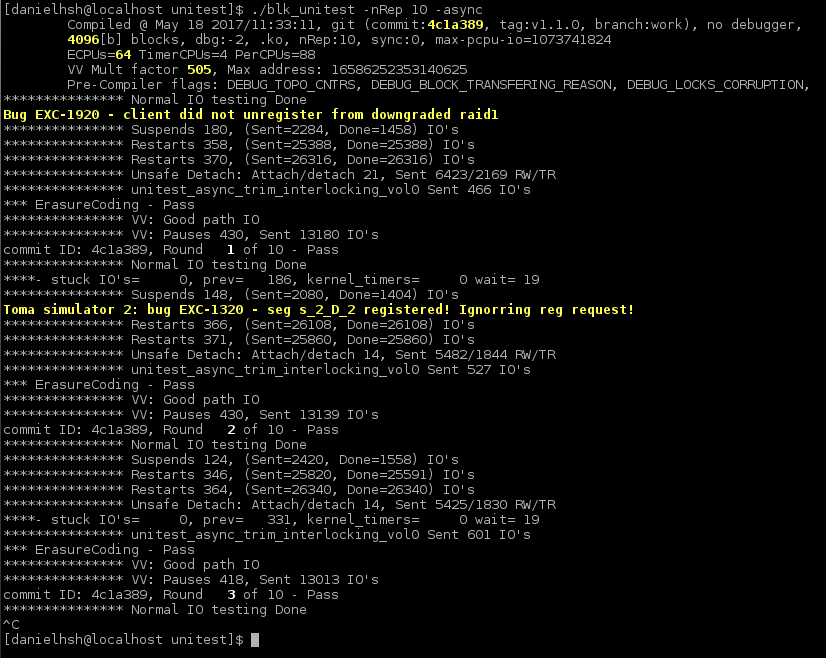

- Once all the simulators with the data services production code are loaded, the uni-testing environment really shines. It accesses the dual API of the simulators (as described above) to craft specific test scenarios.

- Example of one scenario (IO to RAID1): Issue random writes to the client, order one disk to disconnect, order the cluster manager to move client into degraded mode, now freeze everything and verify that the data of the pair of disks in RAID1 matches. Failure of this scenario means data corruption.

- As our development goes, more scenarios are added (and never removed), thus broadly covering more features and more information flows. We also use random scenarios to detect difficult corner cases.

- Our unitest environment has an immense power because it runs many orders of magnitudes faster than real world machines. Even in a short – few minutes – run, it can perform a test that simulates years of typical usage on a customer’s site. One example is a scenario of shutting down a server while IO is in the air. This scenario (while running on my laptop) for 1 second can typically issue ~200K IO/s over 6 simulated disks and ~400 reboots of the simulated server. Just for comparison, a typical server can take 30 seconds to several minutes to reboot while the simulated one does it in ~2 milliseconds.

- We run these unitests overnight for every git commit and they do not require any specialized hardware. If a scenario fails, the testing environment automatically puts a break point and the developer can continue to debug the live run. Unlike typical kernel debugging (add prints to logs and analyzing post mortem) we are able to inspect the problems exactly when they occur.

To summarize the above: our unitest environment is designed for extensive testing, provides full power of user space debugging and guarantees that our customers will receive the highest possible quality data services.

Interesting Note: the testing mechanism I described above is used in the R&D teams and each commit passes countless amount of test cycles before it is submitted to QA team. Thus, QA engineers are relieved of dealing with trivial bugs and can concentrate on the extremely difficult test scenarios.

By Daniel Herman Shmulyan: Data Services Team leader (LinkedIn)