Five Questions with SciNet’s CTO Danny Gruner

High-performance computing is one of the most exciting application categories for production NVMe deployments. In this second post in a series revisiting customer successes that have been in production over a longer period, Excelero spotlights SciNet, Canada’s largest supercomputer center, which provides Canadian researchers with the computational resources and expertise necessary to perform their research at massive scale. The center helps power work ranging from the biomedical sciences and aerospace engineering to astrophysics and climate science.

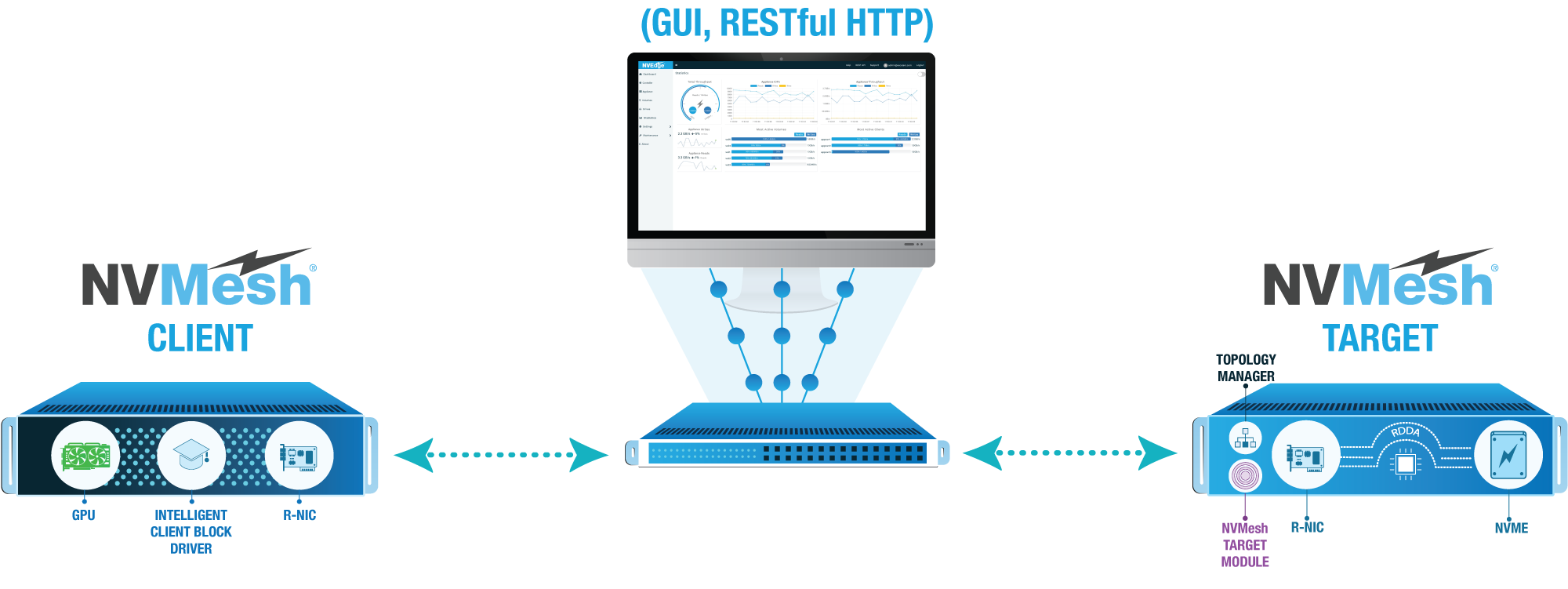

We caught up with SciNet’s Dr. Daniel Gruner, chief technical officer of the SciNet High-Performance Computing Consortium, about the ways that Excelero NVMesh® helps SciNet achieve unheard-of bandwidth, extremely cost-effectively.

1). Describe your application.

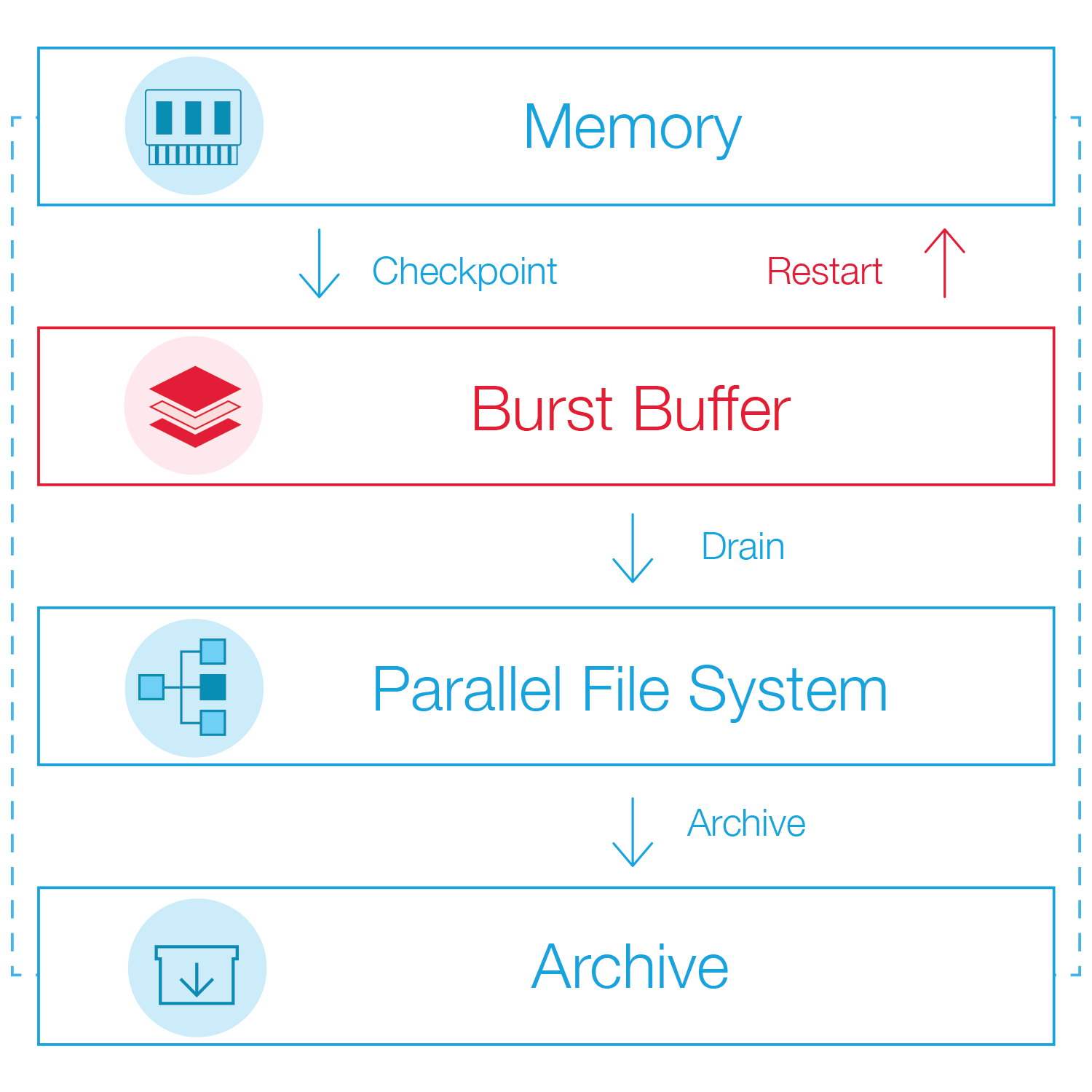

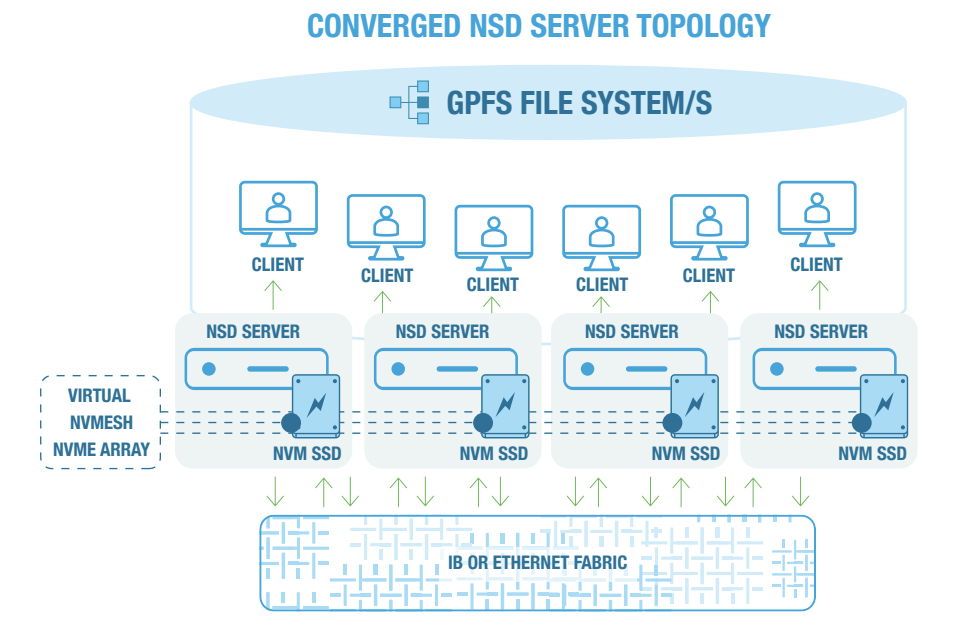

SciNet: SciNet purchased Excelero NVMesh to pool NVMe flash for burst buffer storage in our facility, which is Canada’s largest supercomputing center. A burst buffer is a fast intermediate layer between the non-persistent memory of the compute nodes and persistent storage. It enables fast checkpointing, so that computing jobs can be easily restarted. Since our users run large-scale modeling, simulation, analysis and visualization applications that sometimes run for weeks, an interruption to their work can destroy the result of an entire job. To protect against such interruptions, we implemented this burst buffer based on Excelero’s NVMesh software-defined block storage on the IBM Spectrum Scale parallel file system.

While we acquired NVMesh for use in a burst buffer, our IT team quickly discovered that our user workloads themselves could greatly benefit from storage with much greater bandwidth and better responsiveness, especially in high-IOPS use cases – something that’s extremely hard, if not impossible, to achieve with spinning disk. Over time this use case predominated, demonstrating the value of the NVMesh platform’s flexibility.

2). Why is NVMe well-suited for your application?

SciNet: Checkpoints are typically saved in a shared, parallel file system, and for this SciNet uses IBM Spectrum Scale (formerly GPFS). But as clusters become larger and the amount of memory per node increases, each individual checkpoint becomes larger and either takes more time to complete or requires a higher-performance file system.

Sharing NVMe flash resources enterprise-wide helps us complete checkpoints within 15 minutes to meet availability SLAs. NVMesh also helps ensure that researchers complete their analyses quickly and efficiently.

3). How does Excelero benefit your production NVMe deployment?

SciNet: NVMesh has given us a petabyte-scale unified pool of distributed high-performance NVMe flash. We have a vast and highly efficient deployment that includes 80 pooled NVMe devices, 148 GB/s of write burst (device limited) and 230 GB/s of read throughput across the network. This provides us with well over 20M random 4K IOPS.

With NVMesh, SciNet easily meets the 15-minute checkpoint window, has a storage infrastructure that is extremely cost-effective, and provides what we consider unheard-of burst buffer bandwidth.

By adding commodity flash drives and NVMesh software to compute nodes, and by using the low-latency network fabric that was already in place for the supercomputer itself, the system provides redundancy without impacting target CPUs. This enables standard servers to go beyond their usual role as block targets – the servers can now also act as file servers.
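As a rough illustration of what the figures quoted in this answer imply, the short Python sketch below does the back-of-envelope arithmetic. It is not a SciNet or Excelero tool. The function names are hypothetical, decimal units (1 GB = 10^9 bytes) and 4,096-byte blocks are assumptions, and the checkpoint bound assumes the quoted write burst rate could be sustained for the whole 15-minute window, which a real system may not do.

```python
# Back-of-envelope arithmetic using only figures quoted in the interview.
# Assumptions (not from the source): decimal units, 4096-byte "4K" blocks,
# and a write burst rate sustained for the entire checkpoint window.

WRITE_BURST_GBPS = 148        # aggregate write burst, GB/s (quoted)
READ_GBPS = 230               # aggregate read throughput, GB/s (quoted)
NVME_DEVICES = 80             # pooled NVMe devices (quoted)
RANDOM_4K_IOPS = 20_000_000   # "well over 20M", used here as a lower bound
WINDOW_MINUTES = 15           # checkpoint window (quoted)


def max_checkpoint_tb(write_gbps: float, window_minutes: float) -> float:
    """Upper bound, in TB, on data written within the window at write_gbps."""
    return write_gbps * window_minutes * 60 / 1000


def per_device_gbps(aggregate_gbps: float, devices: int) -> float:
    """Average share of the aggregate throughput per pooled device, in GB/s."""
    return aggregate_gbps / devices


def iops_bandwidth_gbps(iops: int, block_bytes: int = 4096) -> float:
    """Bandwidth in GB/s implied by a given IOPS rate at a fixed block size."""
    return iops * block_bytes / 1e9


if __name__ == "__main__":
    ckpt = max_checkpoint_tb(WRITE_BURST_GBPS, WINDOW_MINUTES)
    print(f"Max checkpoint in {WINDOW_MINUTES} min: {ckpt:.1f} TB")
    print(f"Write per device: {per_device_gbps(WRITE_BURST_GBPS, NVME_DEVICES):.2f} GB/s")
    print(f"Read per device:  {per_device_gbps(READ_GBPS, NVME_DEVICES):.3f} GB/s")
    print(f"20M random 4K IOPS: {iops_bandwidth_gbps(RANDOM_4K_IOPS):.2f} GB/s")
```

Under these assumptions, the 15-minute window bounds a single checkpoint at roughly 133 TB, and the aggregate figures work out to a little under 2 GB/s of write and just under 3 GB/s of read per pooled device.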

4). What’s next for SciNet’s advanced infrastructure?

SciNet: We have a policy of making minimal changes to an IT environment once it’s up and running, to ensure maximum uptime for our users. NVMesh has worked so well and been so stable that we’ve never needed to expand the infrastructure, even when we began using the solution for end-user workloads, which went beyond our initial burst buffer plans. No matter what our users throw at it, NVMesh works incredibly efficiently.

5). What can your center do today that it couldn’t before?

SciNet: With pooled NVMe, SciNet gained important storage functionality with the highest performance available in the industry at a significantly reduced price – while assuring vital scientific research can progress swiftly.