The recently announced NVIDIA EGX A100 allows AI applications to automate the critical infrastructure of the future. NVIDIA EGX is a cloud-native NVIDIA edge AI platform that brings GPU-accelerated AI to everything from traditional, commercial dual-socket x86 servers to Jetson Xavier NX micro-edge servers. The new platform is well complemented by our Elastic NVMe software-defined storage.

As a leading storage partner, we are proud to bring high-performance storage expertise to the NVIDIA EGX edge ecosystem.

The Rise of the Edge Data Center

The rise of the edge data center is well known. AI compute at the edge, until now a challenging endeavor, will become more important and widespread with the rise of 5G, which allows even more devices to send data to edge servers. Huge quantities of streaming data from various sensors add to the ever-increasing bandwidth consumed by video.

Edge data centers are particularly challenging as they are generally smaller and need to be as efficient as possible with regard to power, space and cooling. The NVIDIA edge ecosystem features many server vendors using NVIDIA EGX to build edge servers, alongside hundreds of AI application, enterprise management, cloud-native, software-defined networking and network security companies. Excelero is proud to add Elastic NVMe software-defined storage to this offering. AI inference, real-time analytics and databases at the edge require storage that is both low latency and high bandwidth.

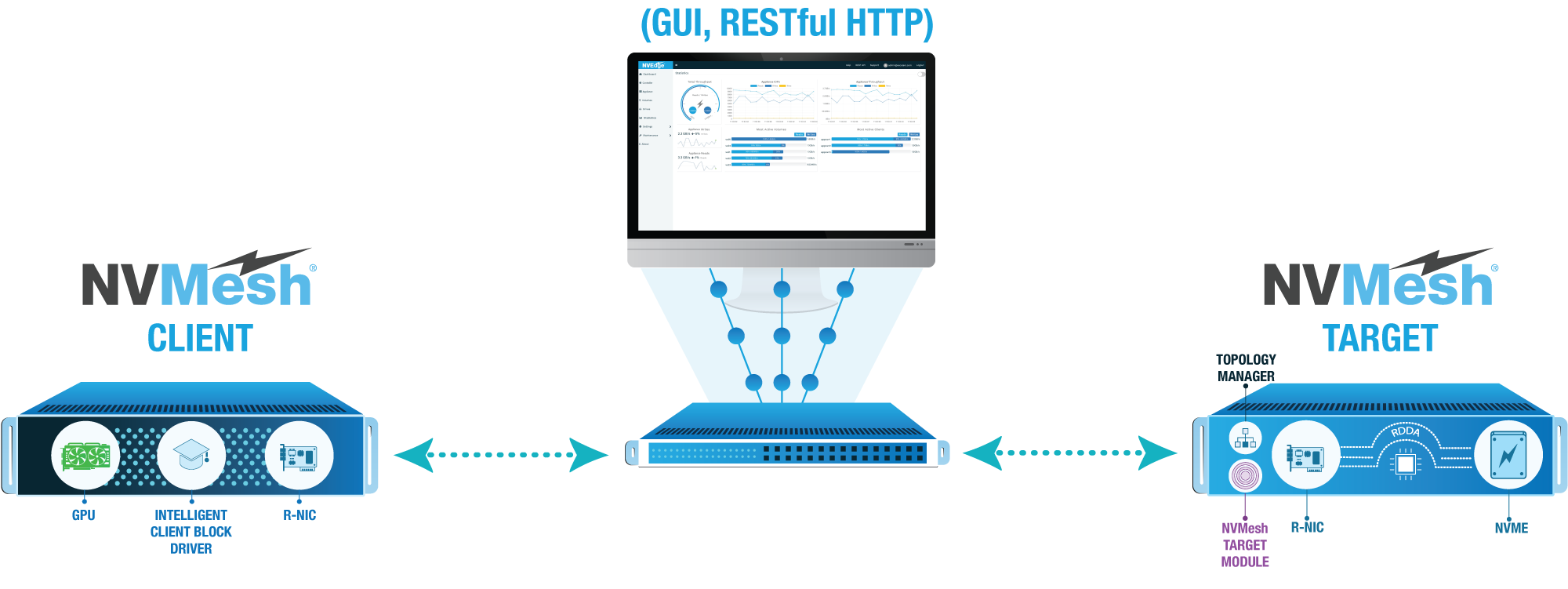

Excelero’s NVMesh offers the industry’s most flexible deployment model for Elastic NVMe. It is deployed as a virtual, distributed non-volatile array and supports both converged and disaggregated architectures. This enables companies to meet performance and capacity requirements within the restrictions of their edge data centers. NVMesh ensures edge applications get the highest storage performance with the best economics at any scale.

Elastic NVMe for the Edge

Excelero realizes that different use cases will call for different levels of performance, power utilization and cost. Excelero’s Elastic NVMe technology has been architected and optimized to support any current or future hardware platform. In addition to supporting dual-socket x86 servers, of particular interest for edge deployments today are our efforts to integrate with NVIDIA Mellanox BlueField SmartNIC architectures, which offer lower power consumption and cost.

What makes the NVMesh architecture truly unique as an AI storage solution at the edge is the controller-less design. When deployed on NVIDIA Mellanox SmartNICs, the storage functionality is fully contained within those SmartNICs, which allows for high performance and logical storage acceleration. Also, as storage traffic bypasses the CPU thanks to GPUDirect Storage and RDDA, both physical and thermal (Watts-per-IO) footprints are reduced to the most efficient levels.

Highest Performance at Smallest Scale

So what performance can be expected at the edge? The Excelero and NVIDIA networking teams just completed a ResNet-50 ImageNet benchmark. ResNet-50 is a 50-layer neural network commonly used for image training. Training such a network on images from the ImageNet dataset is a typical benchmark used to validate a deep learning training system’s capabilities.

The benchmark was run on top of NVIDIA’s networking solutions and Excelero’s NVMesh (NVMe storage solution). Native Kubernetes served as the platform, with the Horovod distributed training framework for TensorFlow and the Kubeflow MPI operator orchestrating the workload job. Two nodes were used as distributed worker nodes, each with a single NVIDIA V100 Tensor Core GPU, an NVIDIA Mellanox ConnectX-5 100GbE SmartNIC dedicated to the training traffic between nodes, and an NVIDIA Mellanox BlueField SmartNIC running NVMe SNAP to provide the interface to the storage fabric. NVIDIA Mellanox SN2700 100GbE Spectrum switches were used for both compute and storage fabrics.

NVMe-oF over RoCE was used for high-speed, low-latency communication between the BlueField I/O processing unit (IPU) and the small Excelero NVMesh cluster of 4 nodes in 2 rack units. The storage connection was accelerated end-to-end between the BlueField IPU (initiator) and the NVMesh cluster (target). A high-capacity ImageNet training dataset was maintained and updated in a single, shared NVMesh logical volume. This volume was exposed to the GPU workers and emulated as a local NVMe drive using NVMe SNAP on the BlueField IPU, removing the need for a CSI driver or any other agent on the worker hosts.

Using this setup, a stable result of 2,454 images/sec was achieved with a batch size of 256. Network utilization was 4.5 Gbps on the compute fabric and 1.25 Gbps on the storage fabric. Jitter was negligible, with results remaining stable for hours. With one GPU in each of the two worker nodes, this works out to roughly 1,227 images/sec per GPU, in line with various publicly available benchmark results that show NVIDIA GPUs achieving ~1,250 images/sec with this set of software. Network utilization was very low compared to the 100GbE links’ potential, and the same can be said for the storage system, which shows how much headroom such a setup has.
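The arithmetic behind these figures can be reproduced with a short Python sketch. The numbers are the ones reported above. The function names are hypothetical, and the sketch assumes that 1 Gbps means 10^9 bits per second, that each fabric is compared against a single 100 Gbps link, and that all storage-fabric traffic is training-image reads (which likely overstates the bytes per image, since metadata and protocol overhead are included).

```python
# Figures reported in the benchmark description.
IMAGES_PER_SEC = 2454   # aggregate training throughput, batch size 256
NUM_GPUS = 2            # two worker nodes, one V100 each
COMPUTE_GBPS = 4.5      # observed traffic on the compute fabric
STORAGE_GBPS = 1.25     # observed traffic on the storage fabric
LINK_GBPS = 100.0       # assumption: compare against one 100GbE link


def per_gpu_throughput(images_per_sec, num_gpus):
    """Average images/sec handled by each GPU."""
    return images_per_sec / num_gpus


def link_utilization(observed_gbps, link_gbps):
    """Fraction of the link capacity in use (0.0 to 1.0)."""
    return observed_gbps / link_gbps


def bytes_per_image(storage_gbps, images_per_sec):
    """Average bytes pulled from storage per image, assuming all
    storage-fabric traffic is image reads (a simplification)."""
    bytes_per_sec = storage_gbps * 1e9 / 8  # bits/sec -> bytes/sec
    return bytes_per_sec / images_per_sec


if __name__ == "__main__":
    print(f"per-GPU throughput: {per_gpu_throughput(IMAGES_PER_SEC, NUM_GPUS):.0f} images/sec")
    print(f"compute fabric use: {link_utilization(COMPUTE_GBPS, LINK_GBPS):.2%}")
    print(f"storage fabric use: {link_utilization(STORAGE_GBPS, LINK_GBPS):.2%}")
    print(f"bytes per image:    {bytes_per_image(STORAGE_GBPS, IMAGES_PER_SEC):.0f}")
```

Under these assumptions, each GPU processes about 1,227 images/sec, the compute and storage fabrics run at 4.5% and 1.25% of a 100 Gbps link respectively, and each image accounts for roughly 64 KB of storage traffic.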

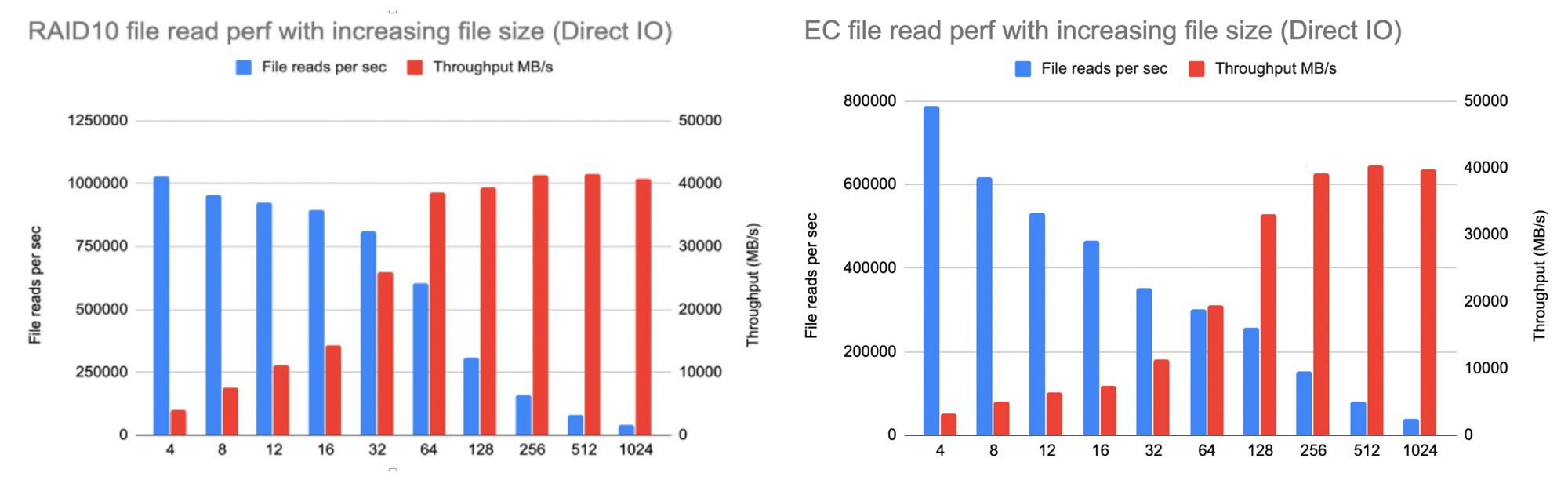

The following graphs show the results obtained using the same NVMesh cluster with a local file system on top of NVMesh. They demonstrate the outstanding potential of such a small footprint in terms of file system operations.