Sven Breuner, Field CTO, Excelero

Part 2 of 3

In the first part of this series, we discussed the similarities and differences between HPC and AI storage solutions. In this second part, a closer look at the 3 Phases of Deep Learning will give us a better understanding of the storage requirements for Deep Learning, given that DL is generally considered one of the most important drivers for high-performance computing storage today. We will then have all we need to design an optimal storage system for scalable Deep Learning in the third and final part.

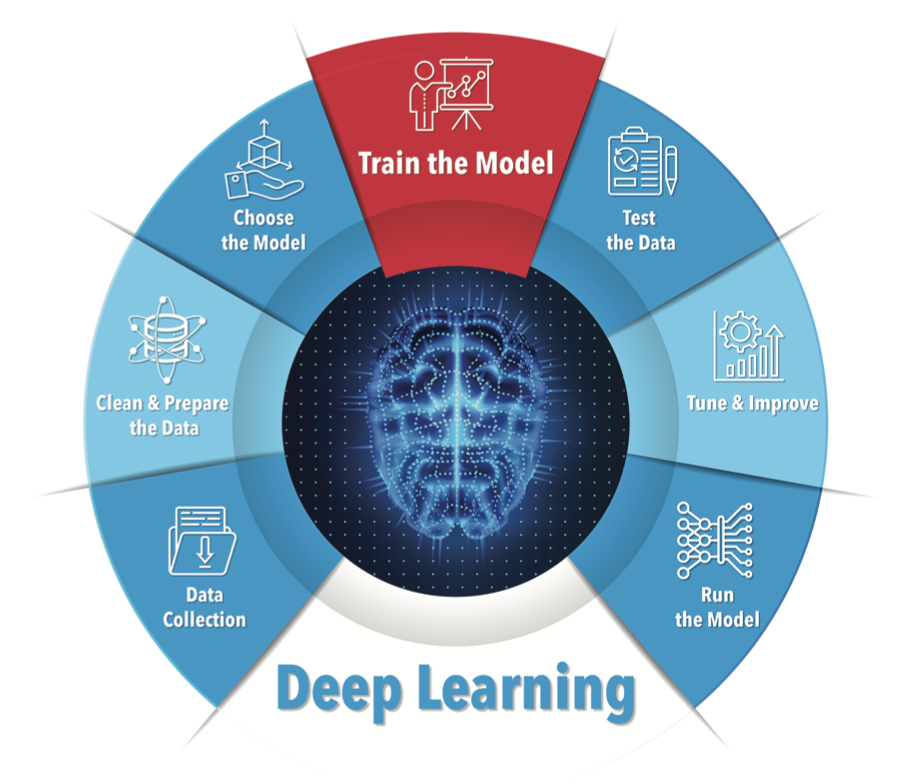

The 3 Phases of Deep Learning

Phase 1: Data Preparation – Extract, Transform, Load (ETL)

The goal of this phase is to have the dataset readily available in a format that is suitable for training. This step is needed when the data comes from a source whose output is not directly usable for training, e.g. because of the color palette used, the resolution, the position of relevant objects inside an image, and so on.
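As a rough illustration of the "transform" step, here is a minimal sketch in plain Python. It assumes an image is simply a nested list of RGB tuples; the function names `to_grayscale`, `center_crop`, and `prepare` are hypothetical, and a real pipeline would rely on an image library rather than hand-written loops.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each value 0..255
Image = List[List[Pixel]]      # rows of pixels

def to_grayscale(image: Image) -> List[List[int]]:
    """Collapse the color palette to a single luminance channel."""
    return [
        [(r * 299 + g * 587 + b * 114) // 1000 for (r, g, b) in row]
        for row in image
    ]

def center_crop(image: List[List[int]], size: int) -> List[List[int]]:
    """Keep a size x size window around the image center."""
    height, width = len(image), len(image[0])
    top, left = (height - size) // 2, (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]

def prepare(image: Image, size: int) -> List[List[int]]:
    """Transform one raw image into the (assumed) training format."""
    return center_crop(to_grayscale(image), size)
```

The point of the sketch is only that each raw object is read once, converted, and written back in a training-friendly form before the training phase begins.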

Phase 2: Training

The goal of the training phase is to teach “the computer” to recognize something of interest. Popular examples are people, cats, or traffic signs.

But how can a computer learn to recognize a cat? The answer is simple on the one hand, but on the other hand it is the major challenge for traditional storage systems: the computer (or rather the GPUs) needs to see lots and lots of cats in all colors, from different angles, and in different poses and sizes. And once each image has been processed, the whole dataset is typically processed again and again in a different order, with a different image rotation, stretched, or in other variations, as all this improves the accuracy of the recognition.
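The repeated passes described above can be sketched as follows. This is a simplified illustration, not how any particular framework implements it: the `ROTATIONS` choices, the seed formula, and the function name `training_reads` are assumptions made for the example.

```python
import random
from typing import Iterator, Tuple

ROTATIONS = (0, 90, 180, 270)  # hypothetical augmentation choices, in degrees

def training_reads(num_images: int, num_epochs: int,
                   seed: int = 0) -> Iterator[Tuple[int, int, int]]:
    """Yield (epoch, image_index, rotation) for every image read.

    Each pass over the dataset uses a fresh random order and a fresh
    random rotation per image.
    """
    for epoch in range(num_epochs):
        rng = random.Random(seed * 1_000_003 + epoch)  # one generator per pass
        order = list(range(num_images))
        rng.shuffle(order)
        for index in order:
            yield epoch, index, rng.choice(ROTATIONS)
```

Every pass touches every image exactly once, so from the storage system's point of view the whole dataset is re-read in a new random order on each pass.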

Also, a company’s data scientists are continuously working on improving the model generation based on the existing training dataset, which makes training an endless process that runs continuously to improve the company’s product and stay ahead of the competition.

In clusters of multiple GPU systems, a given GPU server typically doesn’t read the same subset of objects in a later pass that it read in the first pass, because the data is randomly reordered. This means a cache on the GPU server would not be effective, so the storage system itself has to be able to provide data at the speed at which the GPUs can process it, which is very, very fast. Because the access pattern consists of lots of small file reads, NVMe has proven to be the only technology today that fits these requirements, providing both ultra-low access latency and a high number of read operations per second.
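To see why a local cache helps so little, consider a small simulation. It is a sketch under stated assumptions: the dataset is reshuffled uniformly at random on each pass and dealt out round-robin to the servers, and the names `shards_for_epoch` and `reread_fraction` are hypothetical.

```python
import random
from typing import List, Set

def shards_for_epoch(num_samples: int, num_servers: int,
                     epoch: int, seed: int = 0) -> List[Set[int]]:
    """Shuffle all sample indices for one pass and deal them out round-robin."""
    rng = random.Random(seed * 1_000_003 + epoch)
    order = list(range(num_samples))
    rng.shuffle(order)
    return [set(order[s::num_servers]) for s in range(num_servers)]

def reread_fraction(num_samples: int, num_servers: int, seed: int = 0) -> float:
    """Fraction of server 0's second-pass reads that it also read in the first pass."""
    first = shards_for_epoch(num_samples, num_servers, epoch=0, seed=seed)[0]
    second = shards_for_epoch(num_samples, num_servers, epoch=1, seed=seed)[0]
    return len(first & second) / len(second)
```

Under these assumptions, the expected overlap is about 1 / `num_servers`: with 8 GPU servers, a cache holding everything a server read in the first pass would serve only roughly one in eight reads in the second pass, and the rest would still go to shared storage.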

Another important observation for GPU server clusters is that the dataset should be shared between the GPU servers, as they typically all work with the same dataset.

The fact that this phase is mostly about reading data is an important property that allows some very interesting optimizations of the solution design, as we will see in the next part of this series.

Phase 3: Inference

The goal of the inference phase is to put the trained model to the test by letting the computer recognize whatever objects it has been trained on. So this is the actual application for which we went through all the effort in the training phase.

In the inference phase, object recognition typically happens in real time. For example, in security-sensitive areas where the purpose of the model is to recognize persons, there will be a continuous live feed from cameras that needs to be analyzed. And it’s usually not good enough to recognize that an object is a person; the goal is rather to recognize whether this is a certain person of interest from a database.

This means the latency of data access becomes a critical factor to keep up with the live feed.
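A back-of-the-envelope check makes the latency argument concrete. The sketch below assumes a simple model in which the per-frame compute time and a number of serial storage lookups must together fit into the time between two frames; the function names and all numbers in the usage note are hypothetical.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Time available per frame before the next one arrives, in milliseconds."""
    return 1000.0 / frames_per_second

def keeps_up(frames_per_second: float, compute_ms: float,
             lookups_per_frame: int, lookup_latency_ms: float) -> bool:
    """True if compute plus serial storage lookups fit into the frame budget."""
    total_ms = compute_ms + lookups_per_frame * lookup_latency_ms
    return total_ms <= frame_budget_ms(frames_per_second)
```

For instance, at an assumed 25 frames per second the budget is 40 ms per frame. With 20 ms of compute and 10 lookups per frame, a lookup latency of 0.1 ms fits comfortably, while 5 ms per lookup would make the system fall behind the feed.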

Again, NVMe has proven to be a very good fit for such latency-sensitive use cases in general. However, the amount of persistent data that needs to be accessed during inference varies widely from one application to another, which still leaves training as the most widely recognized critical phase for storage system performance. Thus, we will focus on designing an optimal solution for training workloads in the third and final part of this series.